FineTuning

Large Model Fine-Tuning

Set the training parameters with the `TrainingArguments` class from the Hugging Face Transformers library. These parameters configure the model training process.

    from transformers import TrainingArguments

    # max_steps and output_dir are assumed to be defined earlier in the notebook.
    training_args = TrainingArguments(
        # Learning rate
        learning_rate=1.0e-5,

        # Number of training epochs
        num_train_epochs=1,

        # Max number of update steps to train for. With gradient accumulation,
        # one step consumes gradient_accumulation_steps batches per device.
        # Overrides num_train_epochs unless it is -1.
        max_steps=max_steps,

        # Batch size for training
        per_device_train_batch_size=1,

        # Directory to save model checkpoints
        output_dir=output_dir,

        # Other arguments
        overwrite_output_dir=False,  # Do not overwrite the content of the output directory
        disable_tqdm=False,  # False keeps the progress bars enabled
        eval_steps=120,  # Number of update steps between two evaluations
        save_steps=120,  # Number of update steps between two checkpoint saves
        warmup_steps=1,  # Number of warmup steps for the learning rate scheduler
        per_device_eval_batch_size=1,  # Batch size for evaluation
        evaluation_strategy="steps",  # Renamed to eval_strategy in recent Transformers releases
        logging_strategy="steps",
        logging_steps=1,
        optim="adafactor",
        gradient_accumulation_steps=4,
        gradient_checkpointing=False,

        # Best-checkpoint selection (early stopping itself additionally needs
        # an EarlyStoppingCallback passed to the Trainer)
        load_best_model_at_end=True,
        save_total_limit=1,
        metric_for_best_model="eval_loss",
        greater_is_better=False,  # Lower eval_loss is better
    )
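
How `max_steps`, `gradient_accumulation_steps`, and `eval_steps` interact is easy to misread, so the following is a minimal sketch in plain Python of the arithmetic implied by the settings above. The helper names and the dataset size of 1000 examples are hypothetical, and the step count only approximates what the `Trainer` computes internally (the exact rounding can differ between Transformers versions).

```python
import math


def effective_batch_size(per_device_batch_size, grad_accum_steps, num_devices=1):
    """Number of examples that contribute to one optimizer update."""
    return per_device_batch_size * grad_accum_steps * num_devices


def planned_update_steps(num_examples, per_device_batch_size, grad_accum_steps,
                         num_train_epochs, max_steps=-1, num_devices=1):
    """Approximate number of optimizer updates for a run.

    A positive max_steps takes priority over num_train_epochs.
    """
    if max_steps > 0:
        return max_steps
    batches_per_epoch = math.ceil(num_examples / (per_device_batch_size * num_devices))
    updates_per_epoch = max(batches_per_epoch // grad_accum_steps, 1)
    return math.ceil(num_train_epochs * updates_per_epoch)


def evaluation_steps(total_steps, eval_steps):
    """Update steps at which a steps-based evaluation would trigger."""
    return list(range(eval_steps, total_steps + 1, eval_steps))


# Values taken from the TrainingArguments above; 1000 examples is an assumption.
print(effective_batch_size(1, 4))                  # 4
total = planned_update_steps(1000, 1, 4, num_train_epochs=1)
print(total)                                       # 250
print(evaluation_steps(total, 120))                # [120, 240]
print(evaluation_steps(3, 120))                    # []
```

The last line shows why a very small `max_steps` is worth double-checking: with `eval_steps=120`, a run capped at 3 steps never reaches an evaluation point, so `metric_for_best_model` has nothing to compare.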

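**Difference between Qwen2-1.5B and Qwen2-1.5B-Instruct:**

- **Qwen2-1.5B**: a pretrained (base) model. It has been trained on a large amount of data and has learned general language patterns and knowledge, but it has not been optimized for any specific task.

- **Qwen2-1.5B-Instruct**: an instruction-tuned model (instruction tuning is explained below). It starts from the pretrained model and is fine-tuned further so that it understands and follows natural-language instructions better, which makes it perform better on concrete tasks and instructions.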

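**Difference between pretrained and instruction-tuned models:**

- **Pretrained model**: trained on a large, general-purpose dataset in order to learn the basic regularities of language and general knowledge. It can serve as a starting point for many tasks, but on a specific task it may perform worse than a fine-tuned model.

- **Instruction-tuned model**: trained further on top of a pretrained model, usually on a dataset of instructions or task examples. The goal is for the model to understand and handle such instructions or tasks better, so that it performs better on them.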
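
One practical consequence of this difference is the prompt format: a base model simply continues raw text, while an instruction-tuned model expects its chat template. The sketch below builds a ChatML-style prompt by hand, which is the format the Qwen2 Instruct models use. The function name `format_chatml` is hypothetical, and the real template may also insert a default system message, so in practice `tokenizer.apply_chat_template` from Transformers is the safer choice.

```python
def format_chatml(messages, add_generation_prompt=True):
    """Render a list of {"role", "content"} dicts as a ChatML-style prompt."""
    prompt = "".join(
        f"<|im_start|>{m['role']}\n{m['content']}<|im_end|>\n" for m in messages
    )
    if add_generation_prompt:
        # Leave the assistant turn open so the model writes the reply.
        prompt += "<|im_start|>assistant\n"
    return prompt


# Base model: plain text that the model will continue.
base_prompt = "Fine-tuning a language model means"

# Instruction-tuned model: the same request wrapped in chat turns.
instruct_prompt = format_chatml(
    [{"role": "user", "content": "Explain fine-tuning in one sentence."}]
)
print(instruct_prompt)
```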

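**Which model to fine-tune:**

If your goal is task-specific fine-tuning and your dataset contains explicit instructions or tasks, **Qwen2-1.5B-Instruct** is the recommended choice. It has already been instruction-tuned, so it usually starts from better performance on similar tasks.

If your dataset does not contain explicit instructions, or you want a more general starting point that you adapt yourself, you can choose **Qwen2-1.5B** and fine-tune further from that base.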
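
The rule of thumb above can be written down as a small helper. This is only a sketch: the function name and the `instruction` field name are hypothetical, and a real decision would also weigh dataset size and the target use case. The two strings are the Hugging Face Hub IDs of the checkpoints discussed here.

```python
BASE_MODEL = "Qwen/Qwen2-1.5B"
INSTRUCT_MODEL = "Qwen/Qwen2-1.5B-Instruct"


def choose_checkpoint(records, instruction_key="instruction"):
    """Pick the Instruct checkpoint only if every record carries an instruction."""
    has_instructions = bool(records) and all(
        record.get(instruction_key) for record in records
    )
    return INSTRUCT_MODEL if has_instructions else BASE_MODEL


instruction_data = [{"instruction": "Summarize the text.", "output": "A summary."}]
raw_text_data = [{"text": "Plain domain text without any instruction."}]

print(choose_checkpoint(instruction_data))  # Qwen/Qwen2-1.5B-Instruct
print(choose_checkpoint(raw_text_data))     # Qwen/Qwen2-1.5B
```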

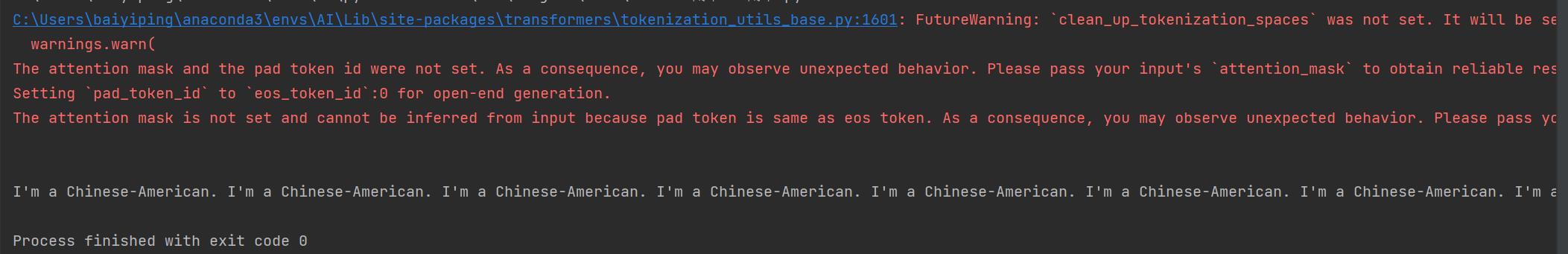

Running inference with a Hugging Face model: (01)

References

DeepLearning.AI / Finetuning Large Language Models | Bilibili